At the GSE UK Conference, Gary Thornhill, founder and CEO of PopUp Mainframe, shared the journey behind his company and how it emerged as a response to the challenges faced during the COVID-19 pandemic. In this interview, he discusses how PopUp Mainframe is revolutionizing mainframe accessibility, addressing industry pain points like environment bottlenecks, skill gaps, and innovation barriers while embracing sustainability and hybrid computing solutions.

Show and Tell – CEO Insights from PopUp Mainframe

Hello, my name is Gary Thornhill, and I am the founder and CEO of PopUp Mainframe. My career has been a bit of a journey. I started out in mainframe operations, which later expanded into middleware. From there, I went on to lead a company that focused on DevOps. The idea for PopUp Mainframe actually came about during the COVID-19 pandemic. At the time, I was the CEO of Sandhata Technologies, a DevOps consultancy. Like many others, we faced the challenge of senior, highly skilled consultants being let go from key client accounts as businesses tightened their budgets. This gave us an opportunity to think differently. One of our clients needed a way to quickly create environments for their work, and we realized there was a broader problem to solve. That’s when PopUp Mainframe was born. It was a solution designed to address the growing need for speed, accessibility, and innovation on the mainframe.

What are some of the current mainframe challenges that PopUp Mainframe addresses?

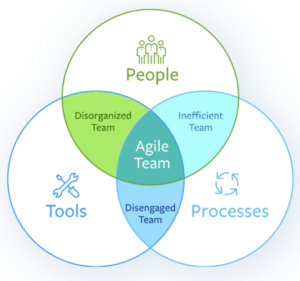

The challenges I see in mainframe organizations are often more about the way they’re set up than the technology itself. A lot of organizations still operate with siloed teams, and many rely heavily on outsourcing. This makes it difficult for businesses to innovate quickly. On top of that, mainframes often have static environments—ones that can’t be easily spun up or down—which limits flexibility.

PopUp Mainframe directly addresses these issues. For example, we allow organizations to create temporary environments in less than ten minutes, whether on-premises or in the cloud. This eliminates the bottleneck of waiting for new environments to be set up. Our platform is also designed to be user-friendly; you don’t need to be an expert in green-screen interfaces to be productive. This accessibility opens up the mainframe to more people, solving both the shortage of environments and the skills gap in the process.

How has the mainframe evolved, and what role do innovative technologies play in this space?

When I started in the industry, roles within mainframe teams were very specialized. You’d have one group managing Db2, another handling IMS, and others focused on operations or automation. Today, that has shifted. People are expected to wear multiple hats, often blending mainframe and distributed skills. For example, a developer might now work across mainframe and Linux environments.

This evolution has been driven in part by initiatives like the Open Mainframe Project, which fosters collaboration and innovation in the community. A great example of this is automated testing. Many organizations are still relying on manual testing, or worse, skipping unit testing altogether. Through PopUp Mainframe, we’ve been working with the Open Mainframe Project to advance frameworks like Galasa, which allows distributed testing tools—such as JUnit or Selenium—to be used on the mainframe.

This kind of innovation is critical. Automated testing not only speeds up development but also reduces the cost of change, making mainframes more competitive and easier to maintain.

Do companies without mainframes benefit from adopting the technology?

It’s a fascinating question. While mainframes are often associated with legacy systems, they’re incredibly relevant in today’s world of big data and high-performance processing. In fact, I’ve seen new clients—organizations that have never used mainframes before—embracing the technology.

Mainframes are unmatched when it comes to reliability and processing power. Parallel Sysplex, for instance, has been around for 30 years and remains the only true hot failover system. If you have high processing needs, there’s simply no better platform. Plus, mainframes are incredibly sustainable, with the lowest cost per transaction compared to other technologies.

PopUp Mainframe offers a way for organizations to explore the benefits of mainframe technology without making massive upfront investments. For businesses looking to test new approaches or handle large volumes of data, the mainframe is still the gold standard.

How does PopUp Mainframe support green tech initiatives?

PopUp Mainframe supports green tech in a couple of ways. First, our platform is literally “on-demand”: systems can be turned on when they’re needed and turned off when they’re not in use. Traditionally, mainframe environments tend to sit idle, gathering dust and accumulating technical debt. By adopting a “switch it off” mentality, organizations can dramatically reduce energy consumption and optimize their resources.

On our end, we’ve taken steps to ensure our own operations are as sustainable as possible. For instance, we source data centers with green initiatives like solar-powered servers. It’s a small but meaningful step, and it aligns with our commitment to greener IT practices.

It’s also worth noting that every digital action has a carbon footprint. Checking your bank balance, for example, uses energy. Most people don’t think about this, but by making IT systems more efficient, we can help reduce the overall environmental impact. PopUp Mainframe is part of the Sustainable IT organization, and we’re pushing for broader changes across the industry.

What’s the biggest misconception about mainframes?

The biggest misconception is that mainframes are outdated dinosaurs. In reality, they’re anything but. Mainframes can do just about everything distributed systems can—and often better.

Take z/OS Connect, for example. It allows mainframes to host web services. Db2 is another great example. It’s an incredibly powerful database, but many organizations aren’t taking full advantage of its capabilities.

The issue isn’t with the technology itself but with how it’s perceived. Organizations need to focus on cultural change—encouraging teams to embrace the smart, innovative tools that are already available on the mainframe.

Can you share one of your clients’ success stories?

We’ve had the privilege of working with some incredible clients. One that stands out is a UK insurance company. They’ve completely transformed their developer experience by using PopUp Mainframe. With tools like VS Code and modern CI/CD pipelines, their developers can now work faster and more efficiently. It’s made the mainframe an attractive platform for innovation, especially for cutting-edge work.

Another client, who runs their mainframe alongside Windows applications in Azure, has seen similar success. Thanks to our partnership with Delphix (Perforce), they can perform end-to-end testing with referential integrity. This level of quality testing has significantly improved their release cycles, allowing them to deliver changes much faster and with greater confidence.

What emerging industry trend excites you the most?

Artificial Intelligence (AI) is incredibly exciting. Tools like Watson Code Assistant and BMC’s Code Insights have the potential to transform how we work. Imagine being able to query vast amounts of documentation and instantly find answers—it’s a game-changer for productivity.

That said, I think it’s important for organizations to approach AI thoughtfully. There’s a lot of hype right now, and it reminds me of the early days of cloud computing. Companies need to clearly define what they hope to achieve with AI, rather than jumping on the bandwagon. Used correctly, AI can solve significant challenges, but it’s not a one-size-fits-all solution.

What’s next for PopUp Mainframe?

We’re incredibly excited about what’s on the horizon for PopUp Mainframe. At the moment, our platform runs on x86 architecture—this includes environments like AWS, Azure, and on-premises virtual machines. But we’re taking things a step further by working on running PopUp Mainframe directly on IBM’s Integrated Facility for Linux (IFL) and LinuxONE.

This development will be a game-changer, especially for larger enterprises. It means organizations will have the flexibility to deploy PopUp Mainframe either on traditional x86 setups or directly on the physical mainframe. For example, they’ll be able to leverage the agility of Delphix (Perforce) virtualization, which allows for forward and rewind capabilities on multiple PopUp Mainframes. This creates an ideal hybrid scenario, where businesses can experiment with cloud-based solutions while still maintaining the reliability and power of their physical mainframes.

In essence, our goal is to offer clients the freedom to operate in a mixed environment, balancing the best of both worlds while keeping their infrastructure modern and adaptable.

How can PopUp Mainframe solve the industry’s talent challenges?

The skills gap in the mainframe industry is a pressing concern, and I firmly believe PopUp Mainframe plays a crucial role in addressing this challenge. We’ve already started working with a few North American universities to introduce students to the platform.

The key lies in making the technology approachable. With PopUp Mainframe, you can do everything you would on a traditional mainframe, but with tools that younger generations are already familiar with—like Eclipse-based GUIs and VS Code. This lowers the learning curve and removes the intimidation factor often associated with mainframes.

Today’s graduates care deeply about making an impact. They’re less focused on the technology itself and more interested in what it can achieve. PopUp Mainframe aligns with that mindset by allowing them to quickly implement ideas, make code changes, and bring new functionality to life. Imagine telling a young developer they’ll have to wait a week for a Db2 update—they’d be pulling their hair out! By contrast, our platform enables near-instantaneous changes, which keeps the momentum going and fosters creativity.

The idea of the “big, scary mainframe” from sci-fi films of the 70s and 80s is outdated. With PopUp Mainframe, we’re helping to reframe that perception and show that mainframes can be just as user-friendly and exciting as any other modern technology.

What does it take to get started with PopUp Mainframe?

Getting started with PopUp Mainframe is remarkably straightforward. You can either download our compressed image, now just 60 GB, or access it through Azure. Once it’s downloaded, setup takes about ten minutes.

We’ve also created the PopUp Manual, a detailed guide that walks users through every step of the process. It covers everything from connecting PopUp Mainframe to your physical mainframe to migrating data and configurations.

The biggest hurdle isn’t the platform itself but navigating organizational processes to gain access to infrastructure. That’s often where delays occur. However, once PopUp Mainframe is installed, you can hit the ground running.

Our platform also offers flexibility when it comes to security. For example, in certain scenarios, you can start without full RACF or ACF2 profiles. This allows you to quickly set things up, make changes, and save them to disk. Later, if necessary, you can provide a more secure copy for broader organizational use.

Ultimately, PopUp Mainframe is just another mainframe—only faster, more agile, and easier to use. It allows teams to utilize their existing skills while bringing in distributed expertise, particularly in areas like testing and automation. It’s the perfect balance of familiarity and innovation.

This transcript is from an interview with Gary Thornhill, conducted by Planet Mainframe at the GSE Conference 2024.